LanceDB's RaBitQ Quantization for Blazing Fast Vector Search

Introducing RaBitQ quantization in LanceDB for higher compression, faster indexing, and better recall on high‑dimensional embeddings.

Build semantic video recommendations using TwelveLabs embeddings, LanceDB storage, and Geneva pipelines with Ray.

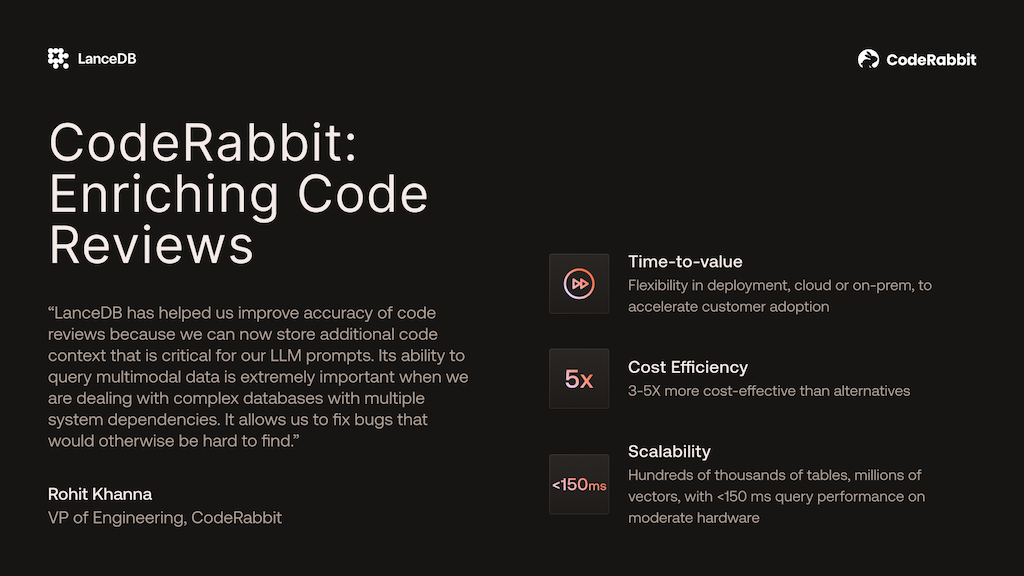

Our September newsletter highlights LanceDB powering Netflix's Media Data Lake, a case study on CodeRabbit's AI-powered code reviews, and updates on Lance Namespace and Spark integration.

Learn how to build real-time multimodal AI analytics by integrating Apache Fluss streaming storage with Lance's AI-optimized lakehouse. This guide demonstrates streaming multimodal data processing for RAG systems and ML workflows.

Learn how to productionize AI workloads with Lance Namespace's enterprise stack integration and the scalability of LanceDB and Ray for end-to-end ML pipelines.

How CodeRabbit leverages LanceDB-powered context engineering to turn every review into a quality breakthrough.

Learn how to build scalable feature engineering pipelines with Geneva and LanceDB. This demo transforms image data into rich features including captions, embeddings, and metadata using distributed Ray clusters.

No more Tantivy! We stress-tested native full-text search in our latest massive-scale search demo. Let's break down how it works and what we did to scale it.

Access and manage your Lance tables in Hive, Glue, Unity Catalog, or any catalog service using Lance Namespace with the latest Lance Spark connector.

Deep dive into LanceDB's dual structural encoding approach - mini-block for small data types and full-zip for large multimodal data. Learn how this optimizes compression and random access performance compared to Parquet.